Reward-Augmented Decoding: Efficient Controlled Text Generation With a Unidirectional Reward Model

Haikang Deng, Colin Raffel

EMNLP, 2023, Singapore

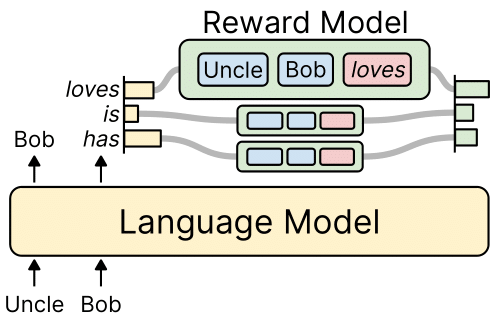

While large language models have proven effective in a huge range of downstream applications, they often generate text that is problematic or lacks a desired attribute. In this paper, we introduce Reward-Augmented Decoding (RAD), a text generation procedure that uses a small unidirectional reward model to encourage a language model...
